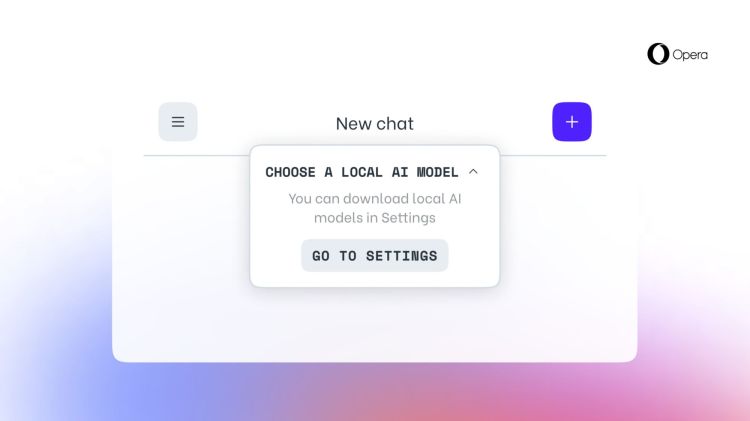

Opera has announced in a blog post that it will implement local LLMs in the Opera One and Opera GX browser versions. Opera already implemented this in April in the Opera One Developer browser, which, however, is intended for developers only (Swiss IT Magazine reported). Support for local LLMs should enable users to switch between different AI providers with just a few clicks. Opera writes that on-device AI support will be available for all Windows, macOS, and Linux devices. However, the company remains silent about when the feature is due to arrive. The blog post says only that Opera is preparing the functionality to leave the Early Access beta phase.

Opera explains that in the future, users will be able to freely choose which AI they want to use in their familiar environment, the browser. In addition, privacy is preserved because requests are processed locally and are not transmitted to a server. The disadvantage is that the response speed depends on the computing power of the user's computer.

Opera wants to make its AI models available to all users as easily as possible. The company writes in the post that its vision is for all Opera users to also be AI users.
